Scalable Content Moderation for a Global UGC Platform

4/13/2025

The Challenge:

A fast-growing digital platform for user-generated content (UGC), including text, images, and short-form videos, was seeing inappropriate, harmful, or offensive content slip past its automated filters. As user numbers soared, its AI models alone could not make the nuanced judgments that gray-area content requires, such as hate symbols, veiled abuse, and borderline adult imagery.

The moderation backlog kept growing. This put the platform at risk of failing to enforce its community standards, eroding brand trust, and drawing regulatory scrutiny in multiple countries.

Our Solution:

LabelCo.AI deployed a multi-layered, human-in-the-loop content moderation system tailored to the client's content policies and risk matrix.

Project Breakdown:

Onboarded a team of 10+ multilingual annotators and reviewers with experience in content moderation and community management.

Moderated text, image, and video content on our secure platform, which integrated with the client's systems via APIs.

Categorized content into granular labels: hate speech, nudity, violence, fake news, spam, bullying, and self-harm risks.

Introduced real-time triage for flagged videos and live streams, with a response time under 5 minutes.

Designed escalation workflows for high-risk content requiring further review or legal notification.

Quality & Compliance:

Trained annotators on cultural sensitivities, international regulations (GDPR, India's IT Rules, COPPA), and client-specific guidelines.

Ran daily QA sampling reviews, resolved labeling conflicts between reviewers, and maintained AI audit trails to track edge-case decisions.

Deployed sentiment and intent classification to detect passive-aggressive tone and coded language.

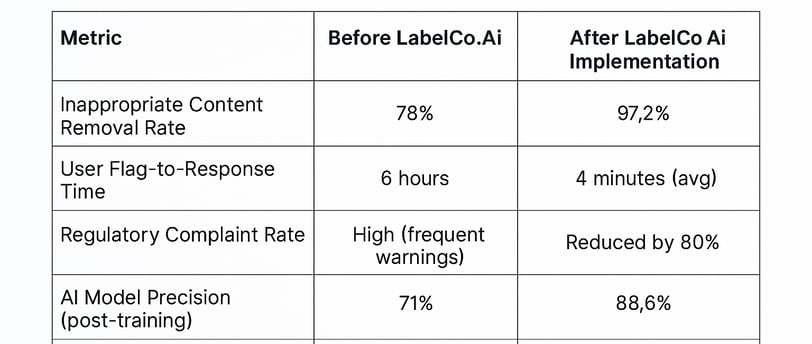

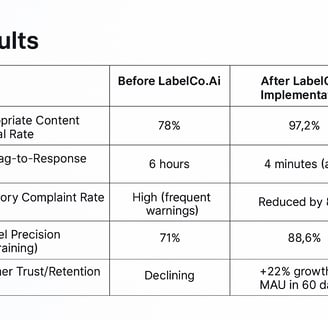

Impact:

Enabled the platform to scale safely from 1M to 4M users in under 6 months

Supported international expansion by handling multilingual, culturally sensitive content

Reduced legal risk by aligning moderation workflows with regional policies and age-gated content rules

Improved AI model performance with human-labeled ground-truth data

Conclusion:

By partnering with LabelCo.AI, the client moved its moderation from reactive to proactive and scaled safely and ethically. The project demonstrated that AI combined with human judgment is the gold standard for content integrity.

LabelCo AI

Expert data annotation for AI and machine learning.

Contact

HELLO@labelco.ai

+91-9711151086

Get a custom quote for your annotation project

© 2025. All rights reserved.