Content Moderation

Keep Your Platform Safe, Respectful, and User-Friendly – Powered by Human + AI Moderation

At LabelCo.Ai, we help businesses build safer, smarter digital spaces by combining the precision of AI with the judgment of real people. Our content moderation services ensure that everything your users upload—from text and images to videos and comments—is carefully reviewed, labeled, and flagged when necessary.

Whether you're running a social platform, e-commerce marketplace, or community app, we help filter out harmful, offensive, or irrelevant content—while protecting freedom of expression and user engagement.

What is Content Moderation?

Content moderation is the process of screening and reviewing user-generated content (UGC) to determine if it complies with platform rules and community standards. It includes flagging hate speech, nudity, fake news, scams, violence, or abusive behavior—and ensuring only safe, appropriate content goes live.

At LabelCo.Ai, we combine AI-powered automation and trained human reviewers to deliver round-the-clock, high-accuracy moderation for platforms of any size.

Why Choose LabelCo.Ai?

100% secure and compliant workflows (GDPR-ready)

Culturally aware, multilingual moderation teams

Real-time or batch moderation options

Balanced human + AI moderation models

Scalable across thousands to millions of data points

150+ Annotators Onboarded

250+ Happy Clients

Importance of Content Moderation

With growing volumes of user-generated content, manual review isn’t enough—and automation alone isn’t perfect. That’s where LabelCo.Ai steps in. Our expert moderation services let your community grow, while keeping it safe, respectful, and brand-aligned.

Protect Brand Reputation

Content moderation helps prevent harmful, offensive, or inappropriate user content from appearing on your platform—protecting your brand image and maintaining user trust.

Ensure User Safety and Community Health

By removing hate speech, bullying, explicit material, and misinformation, moderation creates a respectful and inclusive environment that encourages positive user engagement.

Maintain Global Legal Compliance

Moderation helps your platform follow local and global laws (such as IT Rules, GDPR, and COPPA), reducing the risk of legal action, regulatory fines, or platform bans.

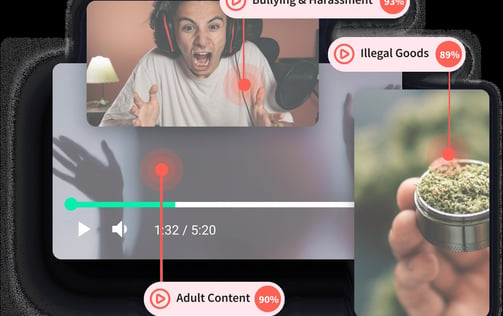

Content moderation plays a crucial role in identifying and filtering out harmful or inappropriate material, including:

Violent or graphic content

Hate speech and discriminatory language

Profanity and sexually explicit references

Inappropriate or offensive images

Substance abuse visuals or mentions

Nudity and sexually suggestive content

Racist or religiously offensive remarks

Political extremism and radical ideologies

Misinformation, fake news, and online scams

Signs of mental health distress or PTSD-related content

Any other user-generated content that violates platform guidelines

To maintain a safe and positive environment, routine content moderation audits are essential. These checks help ensure harmful content is removed while maintaining a respectful user experience. When AI algorithms are part of the process, it becomes even more critical to review decisions for fairness and accuracy, as biased data can impact moderation outcomes.

Leading tech platforms and social media companies conduct regular audits to uphold community safety, trust, and engagement across their ecosystems.

Image Moderation

What it is: We analyze and filter images for nudity, violence, offensive symbols, and copyright violations.

Why it matters: Protects users, advertisers, and brands from reputational damage.

Where it’s used: Dating apps, UGC platforms, marketplaces, social networks.

Video Moderation

What it is: We review video content frame by frame (or using AI models) to flag inappropriate visuals, speech, or context.

Why it matters: Prevents the spread of harmful or explicit content before it reaches audiences.

Where it’s used: Streaming platforms, live content apps, gaming sites.

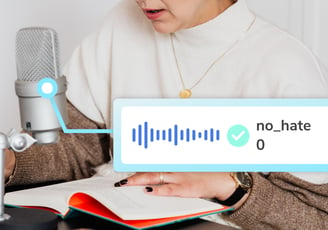

Audio Moderation

What it is: We transcribe and analyze audio clips, voice notes, or spoken content for violations.

Why it matters: Detects inappropriate or offensive language that visual review alone cannot catch.

Where it’s used: Voice apps, podcasts, call center data, smart assistants.

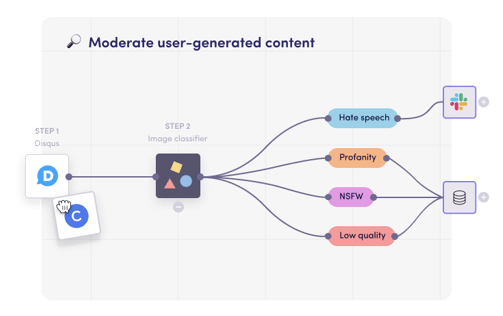

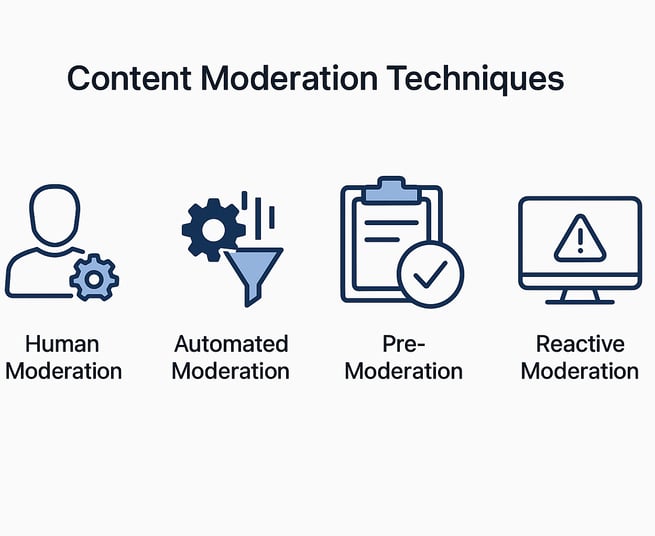

Moderation Techniques We Use

At LabelCo.Ai, we combine advanced AI tools with skilled human reviewers to deliver smart, scalable, and context-aware content moderation.

We use AI-driven keyword filters, image recognition models, and sentiment analysis to automatically flag potentially harmful content. Our human-in-the-loop teams then review edge cases, make context-based decisions, and apply custom moderation rules based on your platform’s guidelines.
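
As a rough illustration of the keyword-filter step described above, here is a minimal Python sketch. It is not LabelCo.Ai's actual implementation: the `BLOCKED_TERMS` list, the `Flag` class, and the `keyword_flag` function are hypothetical names chosen for the example, and a production filter would use a much larger, platform-specific term list.

```python
import re
from dataclasses import dataclass, field

# Hypothetical blocklist for illustration only; a real deployment would
# load terms defined by the platform's own guidelines.
BLOCKED_TERMS = {"scam", "free money"}


@dataclass
class Flag:
    """Result of running the keyword filter over one piece of text."""
    text: str
    matched_terms: list = field(default_factory=list)

    @property
    def needs_human_review(self) -> bool:
        # Any keyword hit sends the item to a human reviewer for context.
        return bool(self.matched_terms)


def keyword_flag(text: str, terms=BLOCKED_TERMS) -> Flag:
    """Flag text containing a blocked term as a whole word or phrase."""
    lowered = text.lower()
    matched = sorted(
        term for term in terms
        if re.search(r"\b" + re.escape(term) + r"\b", lowered)
    )
    return Flag(text=text, matched_terms=matched)
```

Matching on word boundaries keeps harmless words that merely contain a blocked term (for example "scampi") out of the review queue, which is the kind of edge case the human reviewers would otherwise have to clear by hand.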

We support real-time moderation for fast-moving platforms and batch processing for large datasets. Our workflows ensure every piece of content is accurately reviewed—across text, images, videos, and audio—in multiple languages and cultural contexts.

Industry Use Cases of Content Moderation

1. Social Media & UGC Platforms

Why it matters: These platforms handle millions of posts, comments, photos, and videos daily.

Moderation Focus: Hate speech, cyberbullying, nudity, misinformation, harassment.

Goal: Protect users, maintain community standards, and avoid platform bans or backlash.

2. E-commerce & Online Marketplaces

Why it matters: Users submit product reviews, images, and seller content that can be misleading or offensive.

Moderation Focus: Fake reviews, counterfeit products, offensive images, profanity.

Goal: Maintain trust, protect brand integrity, and comply with regional commerce laws.

3. Gaming & Streaming Platforms

Why it matters: Gamers interact via chat, voice, and live video—often in unmoderated environments.

Moderation Focus: Toxic behavior, harassment, profanity, violent/explicit content.

Goal: Ensure safe interactions, especially for minors, and protect advertisers.

4. Dating & Social Apps

Why it matters: These are sensitive platforms, often targeted by fake profiles, explicit content, and harassment.

Moderation Focus: Nudity, sexual content, fake identities, abuse, scam attempts.

Goal: Ensure genuine, respectful engagement and user retention.

5. EdTech & Online Communities

Why it matters: These platforms are education-focused and must remain safe and inclusive.

Moderation Focus: Plagiarism, misinformation, bullying, off-topic or disruptive content.

Goal: Preserve a constructive learning and collaboration environment.

6. Healthcare & Wellness Platforms

Why it matters: User-generated health content can spread misinformation or trigger sensitive users.

Moderation Focus: Inaccurate health advice, triggering mental health content, fake testimonials.

Goal: Uphold factual accuracy, build user trust, and follow health communication standards.

Frequently Asked Questions

What types of content does LabelCo.Ai moderate?

Answer:

LabelCo.Ai moderates text, images, video, and audio content. We handle everything from chat messages and comments to product images, social media posts, live streams, and voice data.

Do you offer real-time content moderation?

Answer:

Yes. Our API-based moderation system supports real-time content filtering with AI pre-screening and human-in-the-loop (HITL) escalation for high-accuracy decision-making—ideal for platforms with fast-moving content.
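
One common way to combine AI pre-screening with human escalation is to route each item by the model's risk score. The Python sketch below assumes a score between 0 and 1 and uses made-up thresholds; the `Decision` enum, the `route` function, and the threshold values are illustrative assumptions, not part of LabelCo.Ai's API.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"    # published without human review
    ESCALATE = "escalate"  # sent to a human reviewer
    REJECT = "reject"      # blocked automatically


def route(score: float,
          approve_below: float = 0.2,
          reject_above: float = 0.9) -> Decision:
    """Route content by a model risk score in [0, 1].

    The thresholds are illustrative defaults, not recommended values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < approve_below:
        return Decision.APPROVE
    if score > reject_above:
        return Decision.REJECT
    # Uncertain middle band: let a person decide.
    return Decision.ESCALATE
```

Widening the middle band sends more items to human reviewers, trading speed for accuracy, while narrowing it does the opposite.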

How accurate is your moderation?

Answer:

We combine smart AI models with human review to deliver up to 99% accuracy, especially for complex or context-sensitive cases. We also offer multi-stage quality checks and ongoing model training to ensure consistency.
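
A quality check of this kind can be as simple as comparing a sample of moderation decisions against labels agreed by senior reviewers. The Python sketch below shows that calculation under stated assumptions: the `audit_accuracy` function and the item IDs are hypothetical, and this is not a description of how the accuracy figure above is measured.

```python
def audit_accuracy(decisions: dict, gold: dict) -> float:
    """Share of audited items where the decision matches the gold label.

    Both arguments map an item ID to a label such as "approve" or
    "reject". Only items present in both mappings are scored.
    """
    shared = decisions.keys() & gold.keys()
    if not shared:
        raise ValueError("no overlapping items to audit")
    correct = sum(decisions[item] == gold[item] for item in shared)
    return correct / len(shared)


# Hypothetical audit sample: four moderated items and their gold labels.
decisions = {"a": "reject", "b": "approve", "c": "approve", "d": "reject"}
gold = {"a": "reject", "b": "approve", "c": "reject", "d": "reject"}
print(audit_accuracy(decisions, gold))  # 0.75
```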

Can I customize moderation rules for my platform?

Answer:

Absolutely. We work with you to define custom content guidelines and moderation parameters tailored to your audience, industry, and regional compliance needs.
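
Custom rules are often captured as a per-platform policy that maps content categories to actions. The Python sketch below is one possible shape for such a policy; the `ModerationPolicy` class, the category names, and the `kids_app` example are all hypothetical and do not reflect LabelCo.Ai's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationPolicy:
    """Per-platform mapping from content category to moderation action."""
    platform: str
    blocked_categories: frozenset
    review_categories: frozenset = frozenset()

    def action_for(self, category: str) -> str:
        if category in self.blocked_categories:
            return "block"
        if category in self.review_categories:
            return "review"
        return "allow"


# Hypothetical policy for a children's app: stricter than a general forum.
kids_app = ModerationPolicy(
    platform="kids_app",
    blocked_categories=frozenset({"profanity", "nudity"}),
    review_categories=frozenset({"external_links"}),
)
```

With this policy, `kids_app.action_for("profanity")` returns `"block"`, while a category the policy does not list is allowed by default.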

Is LabelCo.Ai’s content moderation GDPR-compliant?

Answer:

Yes. We adhere to global data privacy laws, including GDPR, CCPA, and IT Rules. All data is handled securely, with NDA-backed workflows and strict confidentiality protocols.

LabelCo AI

Expert data annotation for AI and machine learning.

Contact

HELLO@labelco.ai

+91-9711151086

Get a custom quote for your annotation project

© 2025. All rights reserved.